>>>/@LOWCOSTCOSPLAY/2028554009997685168

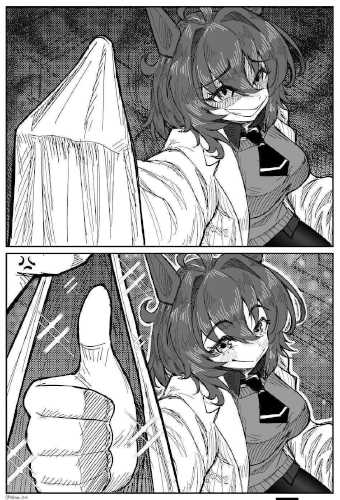

!ReneWy59x.

/人◕ ‿‿ ◕人\ oh god

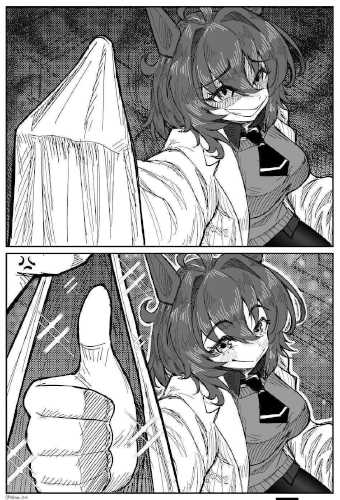

>>>/@LOWCOSTCOSPLAY/2013130561729298768

/人◕ ‿‿ ◕人\

steal his look

/人◕ ‿‿ ◕人\

peace in the middle east